
I am a first-year Ph.D. student at the Multimedia Lab (MMLab) of The Chinese University of Hong Kong. My research focuses on foundation models for multimodal learning and on AI for Science. Prior to joining MMLab, I received my B.S. degree from Beijing Institute of Technology in 2023. I have worked as a research intern at Megvii (Face++), Alibaba Group, and Shanghai AI Lab. Email / Google Scholar / GitHub / Zhihu


News


Research

I'm interested in multimodal foundation models (architectural designs, pretraining frameworks, and generative models). I'm also curious about research topics in AI for Science. (Research cooperation is welcome; please drop me an email.)


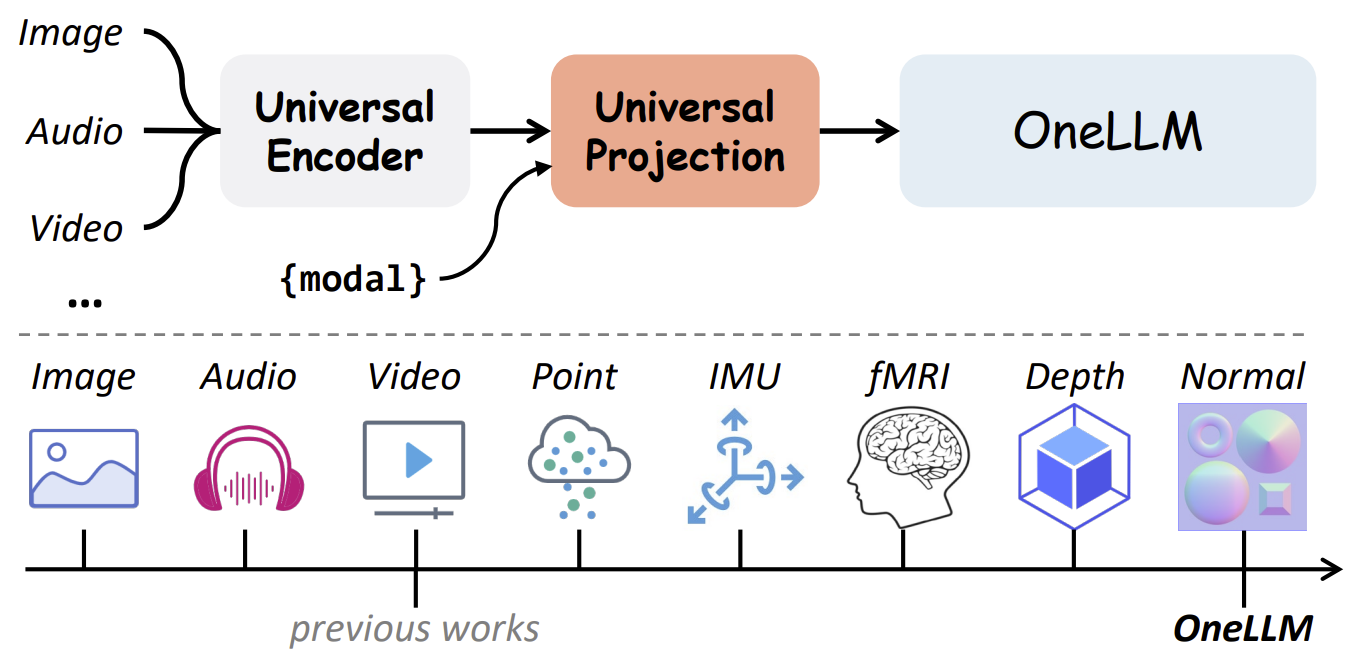

Yiyuan Zhang*, Kaixiong Gong*, Kaipeng Zhang†, Hongsheng Li, Yu Qiao, Wanli Ouyang, Xiangyu Yue†. Preprint, 2023. A single foundation model that handles 12 modalities and supports a wide range of applications.


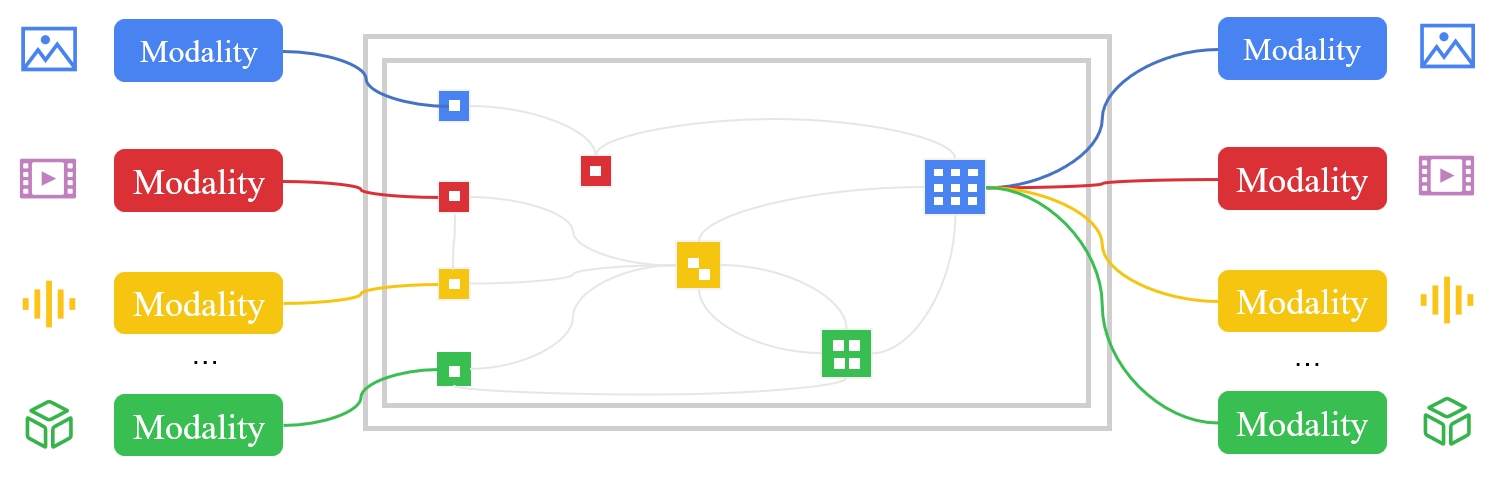

Yiyuan Zhang, Xiaohan Ding, Kaixiong Gong, Yixiao Ge, Ying Shan, Xiangyu Yue†. CVPR, 2024. A flexible multimodal architecture that reveals how modalities interact and benefit one another, with an early exploration of unpaired multimodal data.


Xiaohan Ding*, Yiyuan Zhang*, Yixiao Ge, Sijie Zhao, Lin Song, Xiangyu Yue, Ying Shan. CVPR, 2024. The first CNN-based architecture for general-purpose multimodal learning, highlighting its universal perception ability.


Jiaming Han, Kaixiong Gong, Yiyuan Zhang, Jiaqi Wang, Kaipeng Zhang, Dahua Lin, Yu Qiao, Peng Gao, Xiangyu Yue. CVPR, 2024. A simple framework to align 8 modalities with large language models.

Services

Awards and Honors

Teaching
