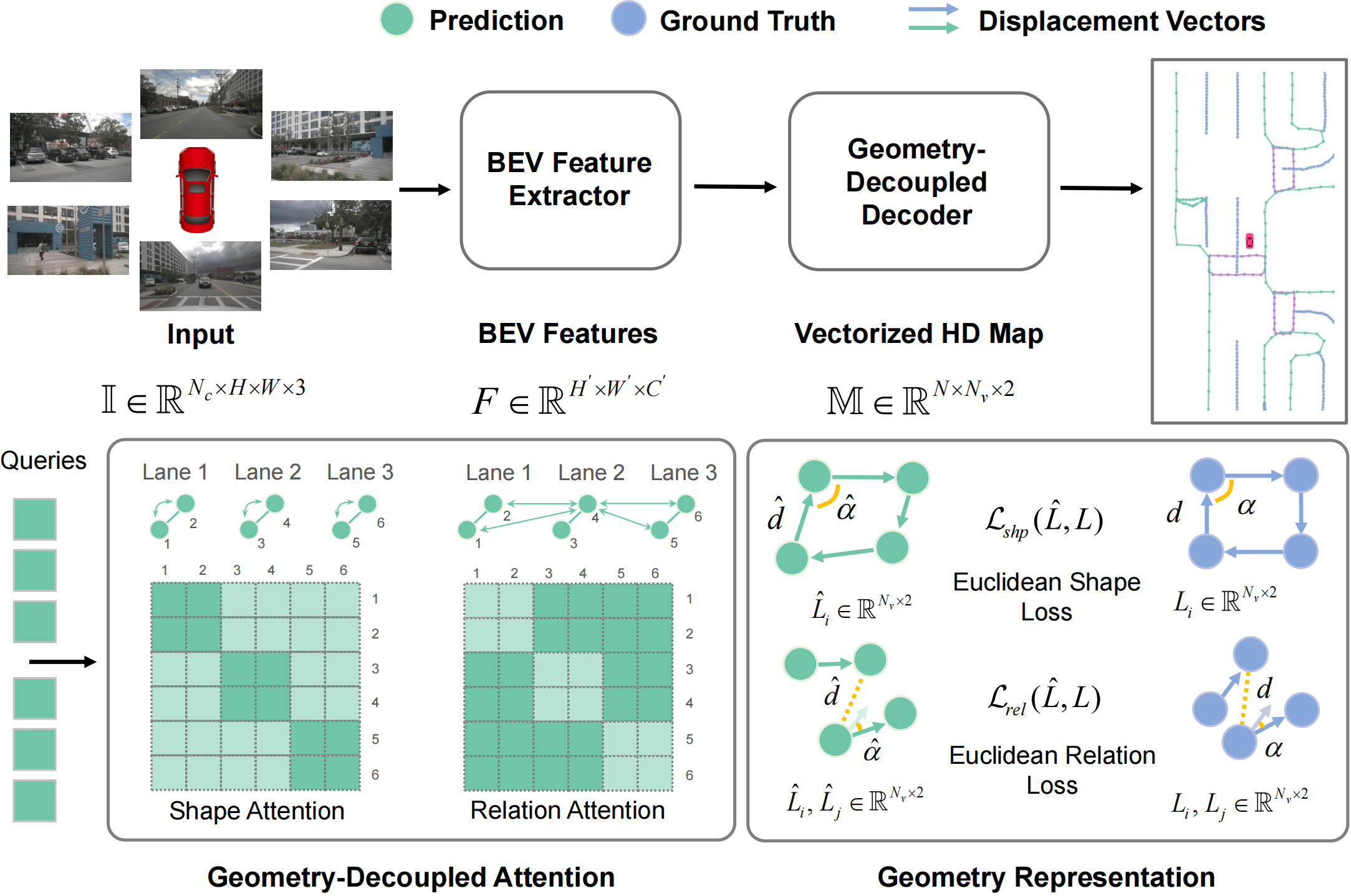

Illustration of the GeMap framework. First, Perspective View (PV) images are transformed into Bird's-Eye-View (BEV) features; a geometry-decoupled decoder then outputs the vectorized HD map. In each decoder block, queries are first processed by Euclidean shape attention and Euclidean relation attention, which focus on geometric relevance. Finally, predictions are enhanced in G-Representations by shape and relation constraints.

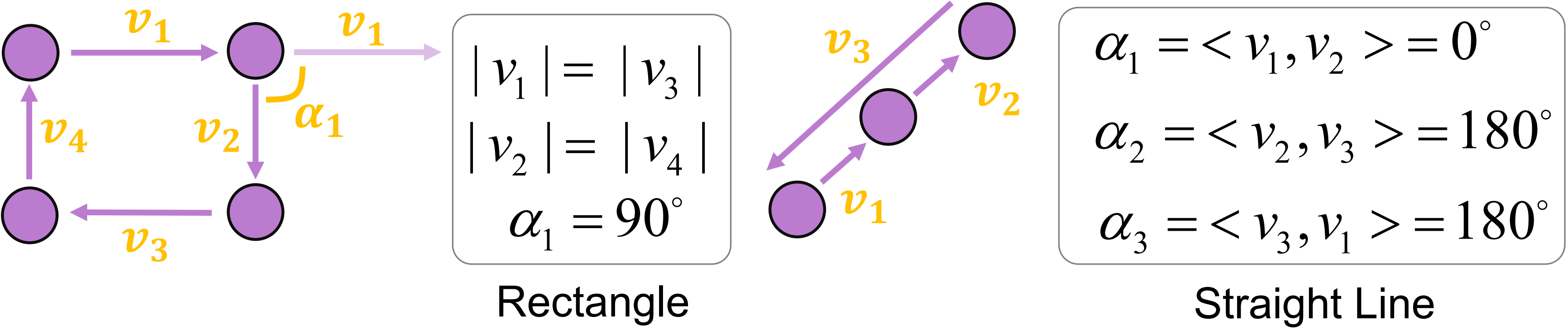

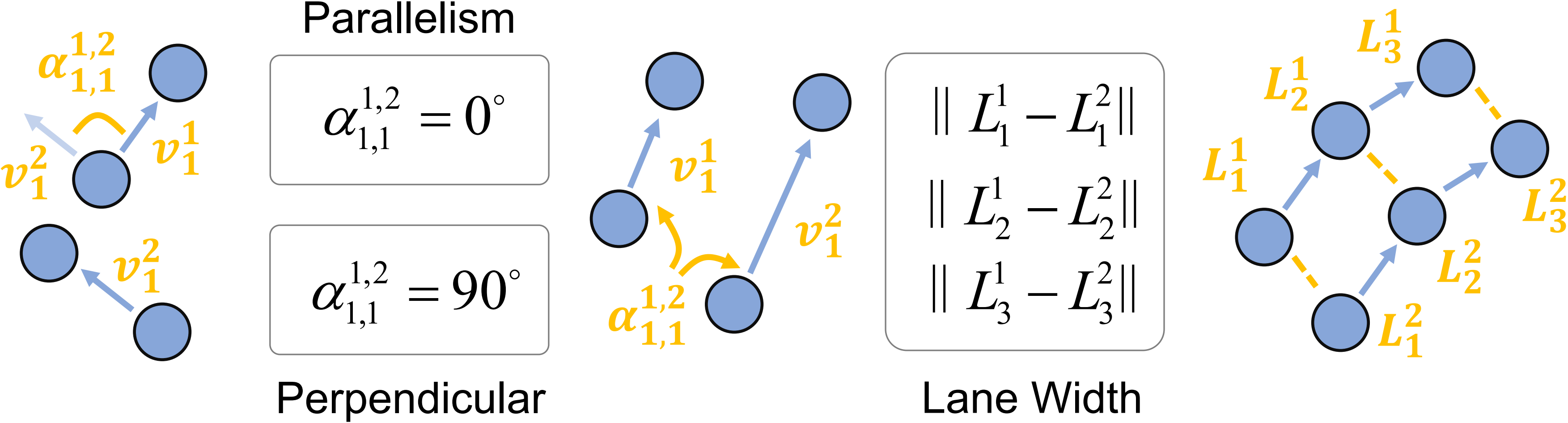

Euclidean shape clues. The lengths of displacement vectors and the angles between neighboring vectors constitute the shape clues and are used to compute the shape loss. For example, a rectangle is characterized by 90-degree angles and equal-length opposite sides.
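The shape clues above can be computed with a few lines of NumPy. This is a minimal sketch, assuming each map instance is an ordered polyline stored as a `(P, 2)` array; the names `shape_clues` and `shape_loss` are hypothetical, and comparing raw angles with an L1 loss is an illustrative choice, not necessarily the exact formulation used by GeMap.

```python
import numpy as np

def shape_clues(points):
    """Shape clues for one polyline of shape (P, 2).

    Returns the length of each displacement vector, shape (P-1,), and the
    angle in radians between each pair of neighboring displacement vectors,
    shape (P-2,).
    """
    disp = np.diff(points, axis=0)              # displacement vectors, (P-1, 2)
    lengths = np.linalg.norm(disp, axis=1)      # |v_i|
    a, b = disp[:-1], disp[1:]                  # neighboring vector pairs
    cos = np.sum(a * b, axis=1) / (lengths[:-1] * lengths[1:] + 1e-8)
    angles = np.arccos(np.clip(cos, -1.0, 1.0)) # clip guards against rounding
    return lengths, angles

def shape_loss(pred, gt):
    """Hypothetical shape loss: mean L1 gap between predicted and GT clues."""
    lp, ap = shape_clues(pred)
    lg, ag = shape_clues(gt)
    return np.abs(lp - lg).mean() + np.abs(ap - ag).mean()

# A closed 2 x 1 rectangle: side lengths 2, 1, 2, 1 and right angles throughout.
rect = np.array([[0, 0], [2, 0], [2, 1], [0, 1], [0, 0]], dtype=float)
lengths, angles = shape_clues(rect)
print(lengths, np.degrees(angles))
```

Because lengths and angles are derived from displacement vectors, the loss is unchanged when a polyline is translated or rotated, so it penalizes errors in shape rather than in absolute position.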

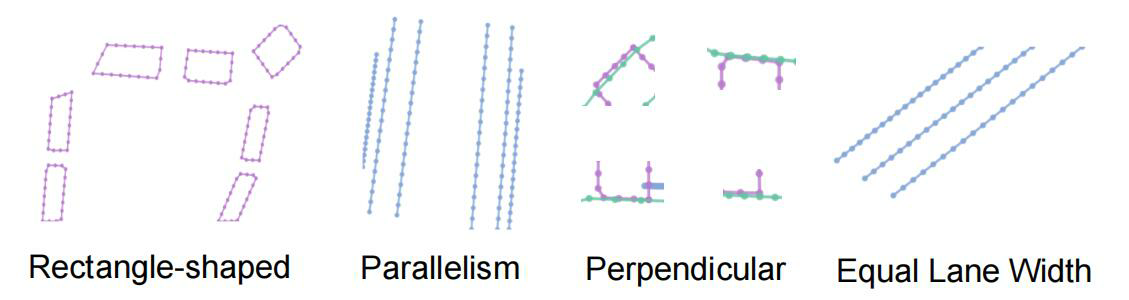

Euclidean relation clues. Distances between pairs of points and angles between pairs of displacement vectors form the relation clues. These clues are directly connected to Euclidean relation geometry, such as parallelism or perpendicularity.
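A similar sketch for the relation clues, assuming a batch of N instances, each resampled to P points and stored as an `(N, P, 2)` array. `relation_clues` and `relation_loss` are hypothetical names; using the cosine of the angle and comparing all point pairs and vector pairs densely are simplifications for illustration, not the paper's confirmed formulation.

```python
import numpy as np

def relation_clues(instances):
    """instances: (N, P, 2) array of N polylines with P points each.

    Returns:
      dist: (N*P, N*P) pairwise Euclidean distances between all points
      cos:  (M, M) cosine of the angle between every pair of displacement
            vectors, where M = N * (P - 1)
    """
    pts = instances.reshape(-1, 2)
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    disp = np.diff(instances, axis=1).reshape(-1, 2)
    unit = disp / (np.linalg.norm(disp, axis=1, keepdims=True) + 1e-8)
    cos = unit @ unit.T
    return dist, cos

def relation_loss(pred, gt):
    """Hypothetical relation loss: mean L1 gap between predicted and GT clues."""
    dp, cp = relation_clues(pred)
    dg, cg = relation_clues(gt)
    return np.abs(dp - dg).mean() + np.abs(cp - cg).mean()

# Two parallel lanes one unit apart: every pair of displacement vectors has cosine 1.
lanes = np.array([[[0, 0], [1, 0], [2, 0]],
                  [[0, 1], [1, 1], [2, 1]]], dtype=float)
dist, cos = relation_clues(lanes)
print(cos.round(3))
```

A cosine of ±1 marks parallel displacement vectors and a cosine of 0 marks perpendicular ones, so these clues expose parallelism and perpendicularity directly.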

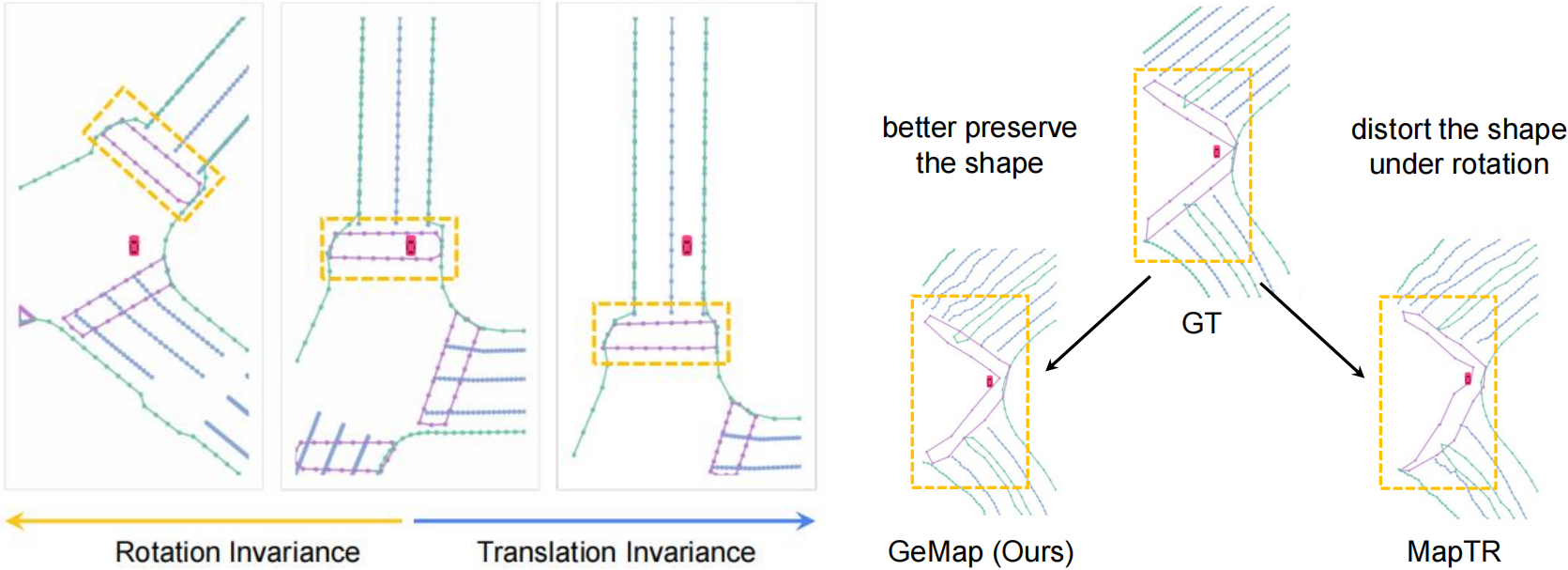

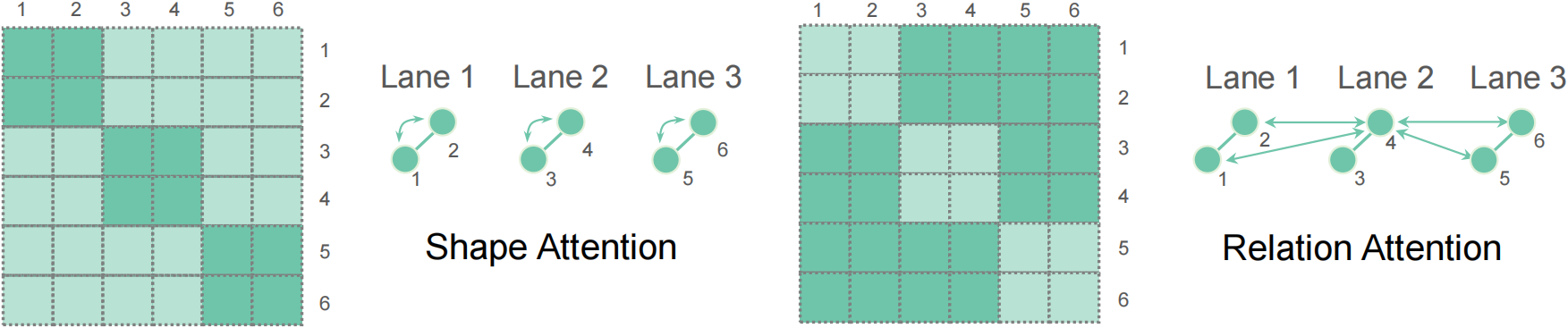

The geometry of shape and relation pertains to distinct subsets of tokens. For a given map instance, its shape is closely tied to the tokens representing that instance's points. Capturing shape geometry precisely requires the model to discern token correlations within an instance while avoiding interference from tokens of unrelated instances. Conversely, relation geometry modeling benefits from isolating token correlations that span different instances rather than those confined to a single instance.

We therefore propose Geometry-Decoupled Attention (GDA), which decouples the vanilla self-attention module into Euclidean shape attention, capturing intra-instance geometry, and Euclidean relation attention, capturing inter-instance geometry.
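The decoupling can be illustrated with attention masks over point-level query tokens. This is a minimal single-head NumPy sketch under several assumptions not taken from the source: there are no learned query/key/value projections, the two attentions are applied in sequence with residual connections, and each token may attend to itself in relation attention so that no mask row is empty. All function names are hypothetical, and GeMap's actual implementation may organize the computation differently.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(x)                             # exp(-inf) = 0 for masked pairs
    return e / e.sum(axis=axis, keepdims=True)

def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention; mask[i, j] = True keeps pair (i, j)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    return softmax(scores) @ v

def geometry_decoupled_attention(x, instance_ids):
    """x: (T, C) point-level query tokens; instance_ids: (T,) instance of each token."""
    same = instance_ids[:, None] == instance_ids[None, :]
    eye = np.eye(len(instance_ids), dtype=bool)
    # Euclidean shape attention (sketch): only tokens of the same instance interact.
    x = x + masked_attention(x, x, x, same)
    # Euclidean relation attention (sketch): tokens of different instances interact;
    # keeping the diagonal is an assumption that avoids fully masked rows.
    x = x + masked_attention(x, x, x, ~same | eye)
    return x

rng = np.random.default_rng(0)
tokens = rng.normal(size=(6, 4))              # 2 instances x 3 points, 4 channels
ids = np.array([0, 0, 0, 1, 1, 1])
print(geometry_decoupled_attention(tokens, ids).shape)
```

Because the shape mask blocks cross-instance pairs, changing the tokens of one instance leaves the shape-attention output of every other instance untouched; only the relation step mixes information between instances.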

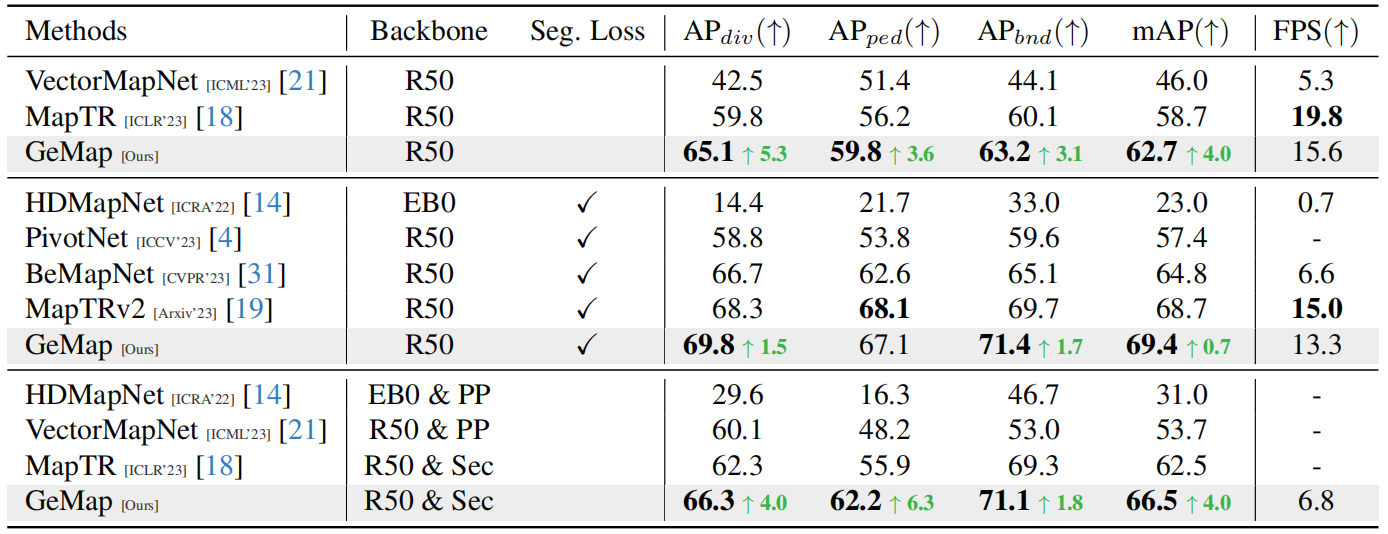

Comparison on the NuScenes dataset, where our method achieves new state-of-the-art performance. "EB0", "R50", "PP", and "Sec" denote EfficientNet-B0, ResNet50, PointPillars, and SECOND, respectively. Methods with two backbones use both camera and LiDAR inputs. "Seg. Loss" indicates whether any segmentation-based loss is adopted. The best results are highlighted in bold. For a fair FPS comparison, we reproduce the compared methods on a single RTX 3090 GPU.

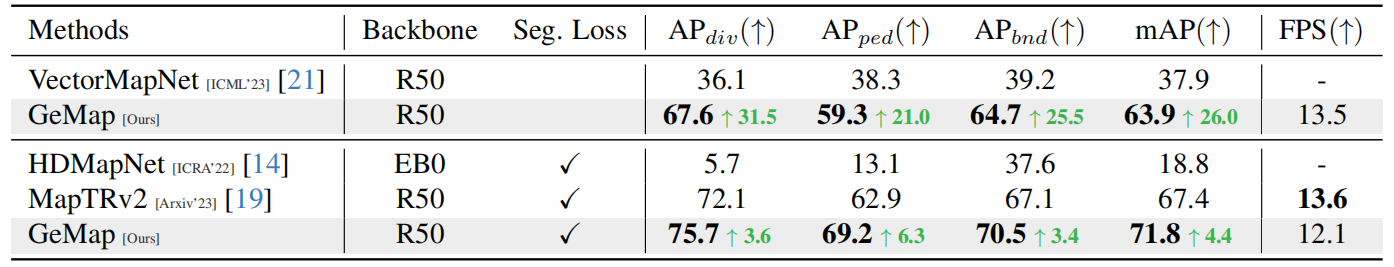

Comparison on the Argoverse 2 dataset. Our method demonstrates significant performance improvements over previous methods.

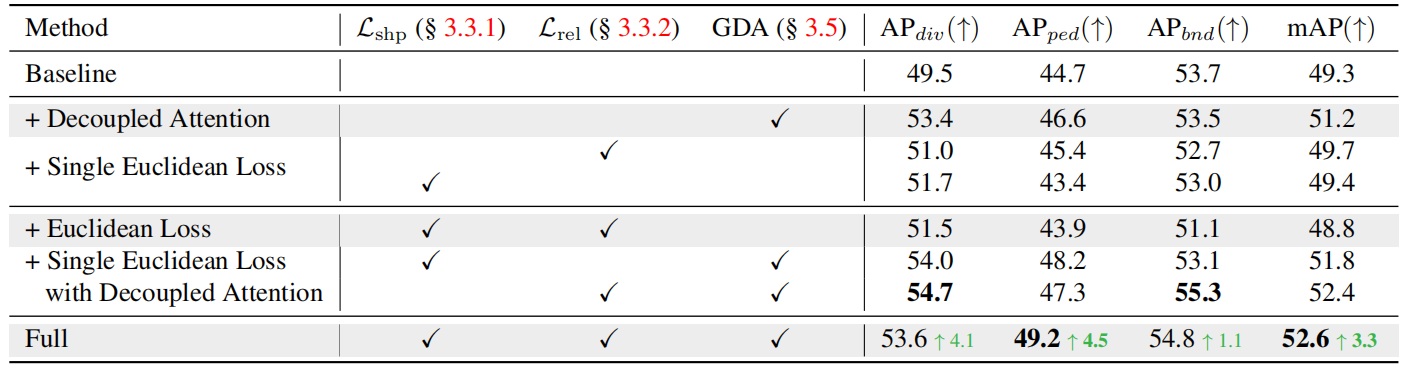

Ablation study on the NuScenes dataset. For faster ablation, we train the model for only 24 epochs with simplified objectives.

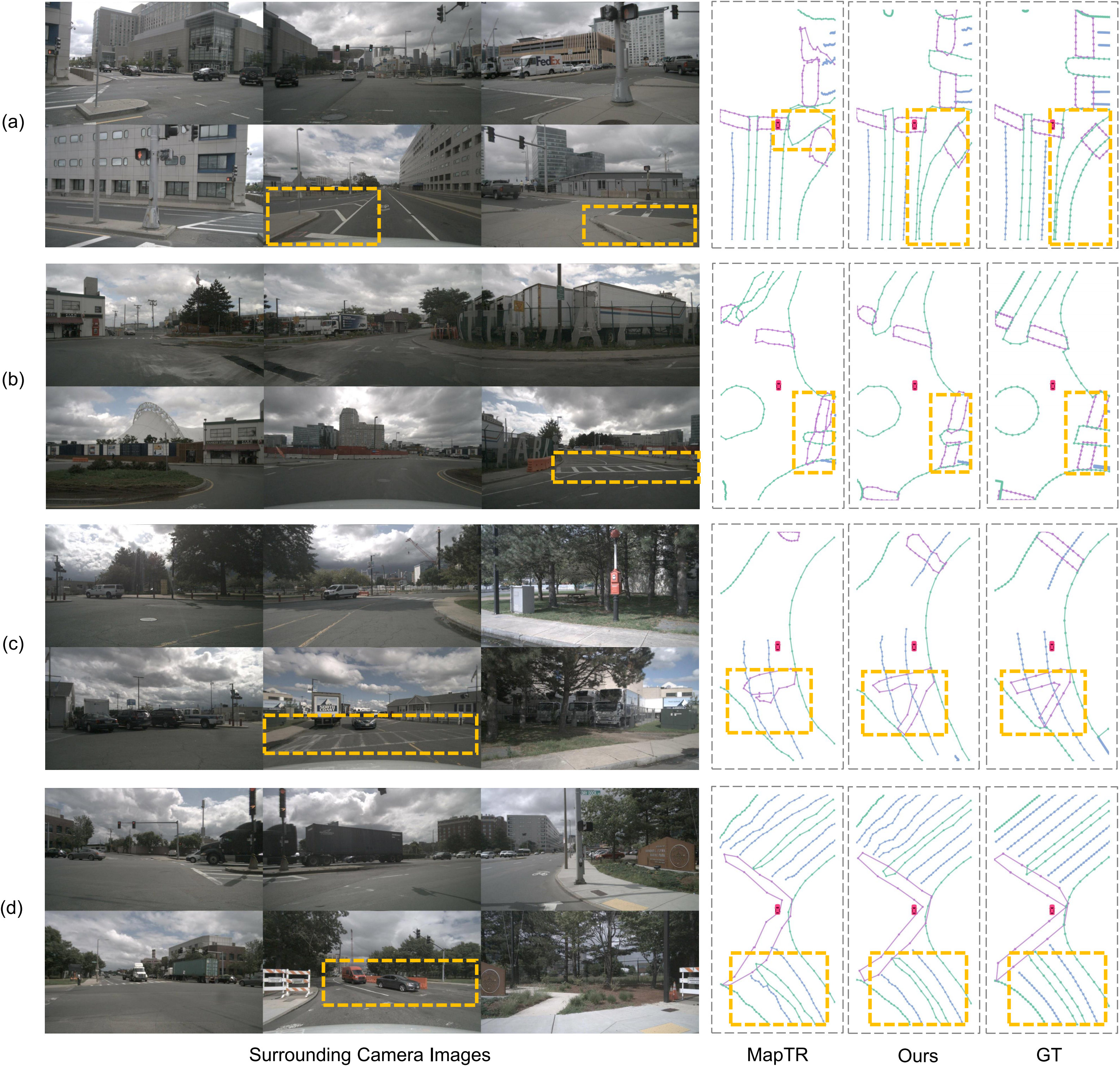

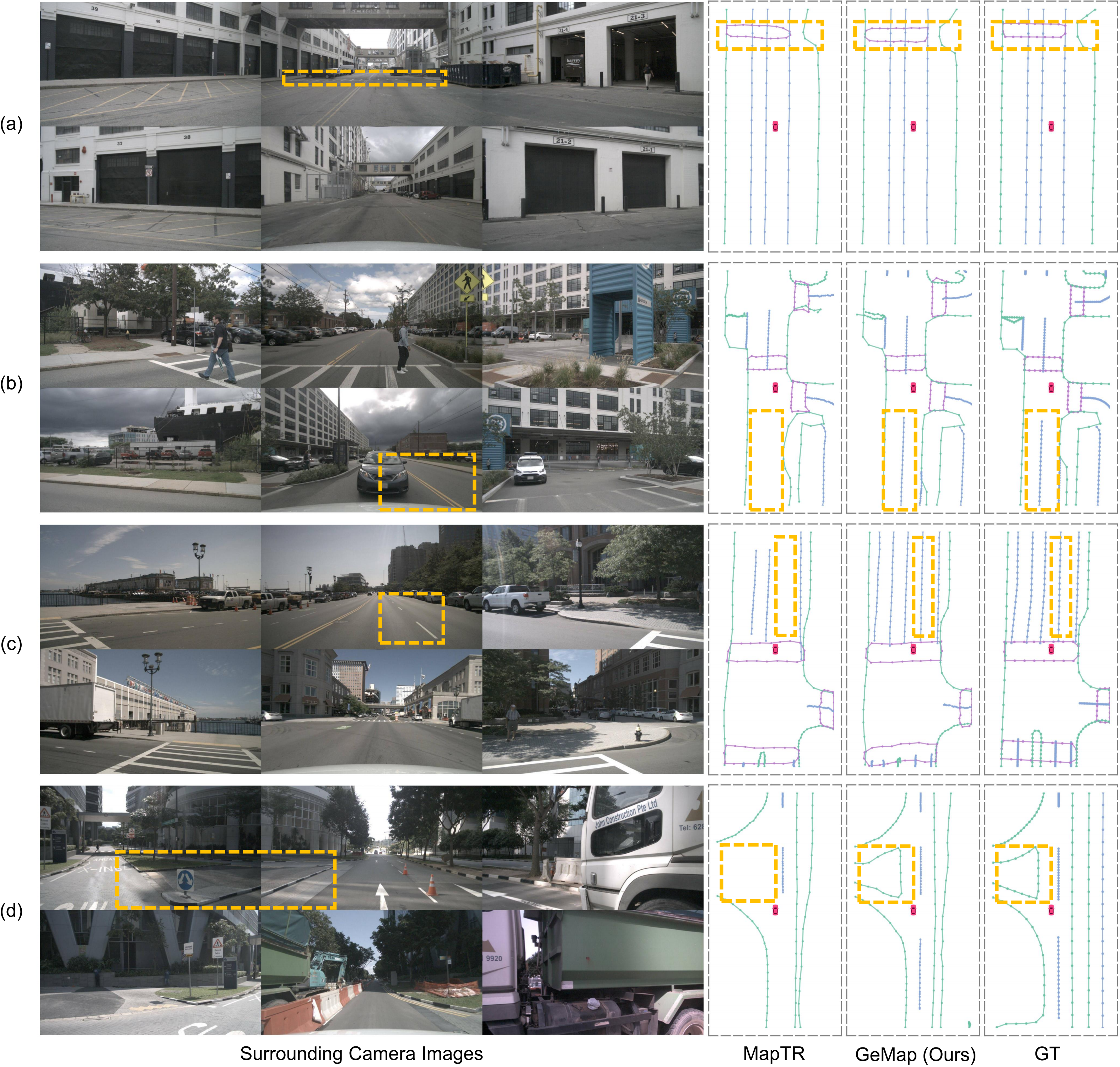

Scenario (a) depicts a complex triangular road boundary with an extended tail. Scenarios (b) and (c) depict pedestrian crossings that are only partially observed. In scenario (d), the BEV map is tilted and lane markings are occluded by vehicles. These challenging cases demonstrate the superiority and robustness of GeMap.

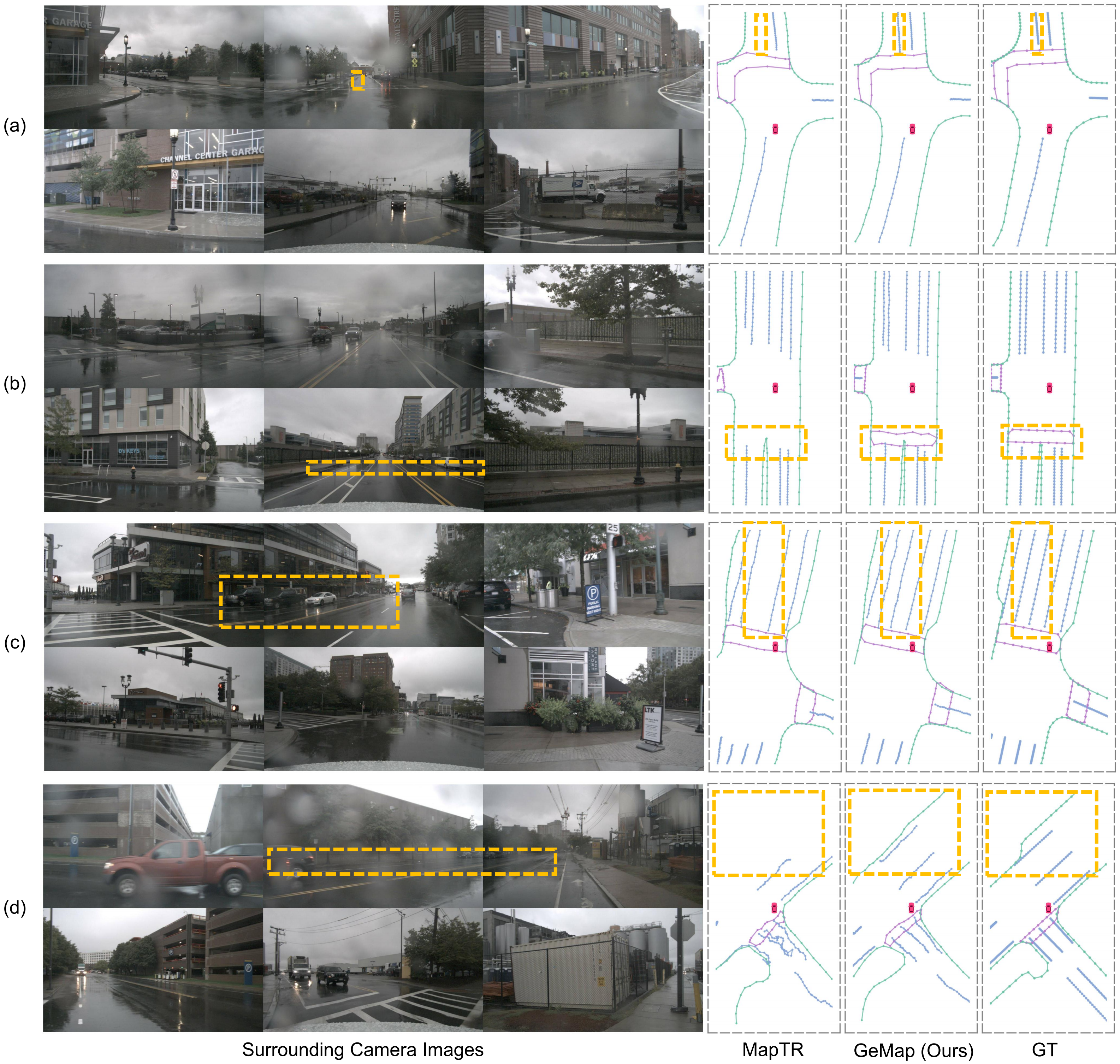

Visualization results under challenging rainy weather conditions. Even with noisy reflections on the road and map instances occluded by water drops, our method still provides robust predictions.

Visualization results under sunny weather conditions.

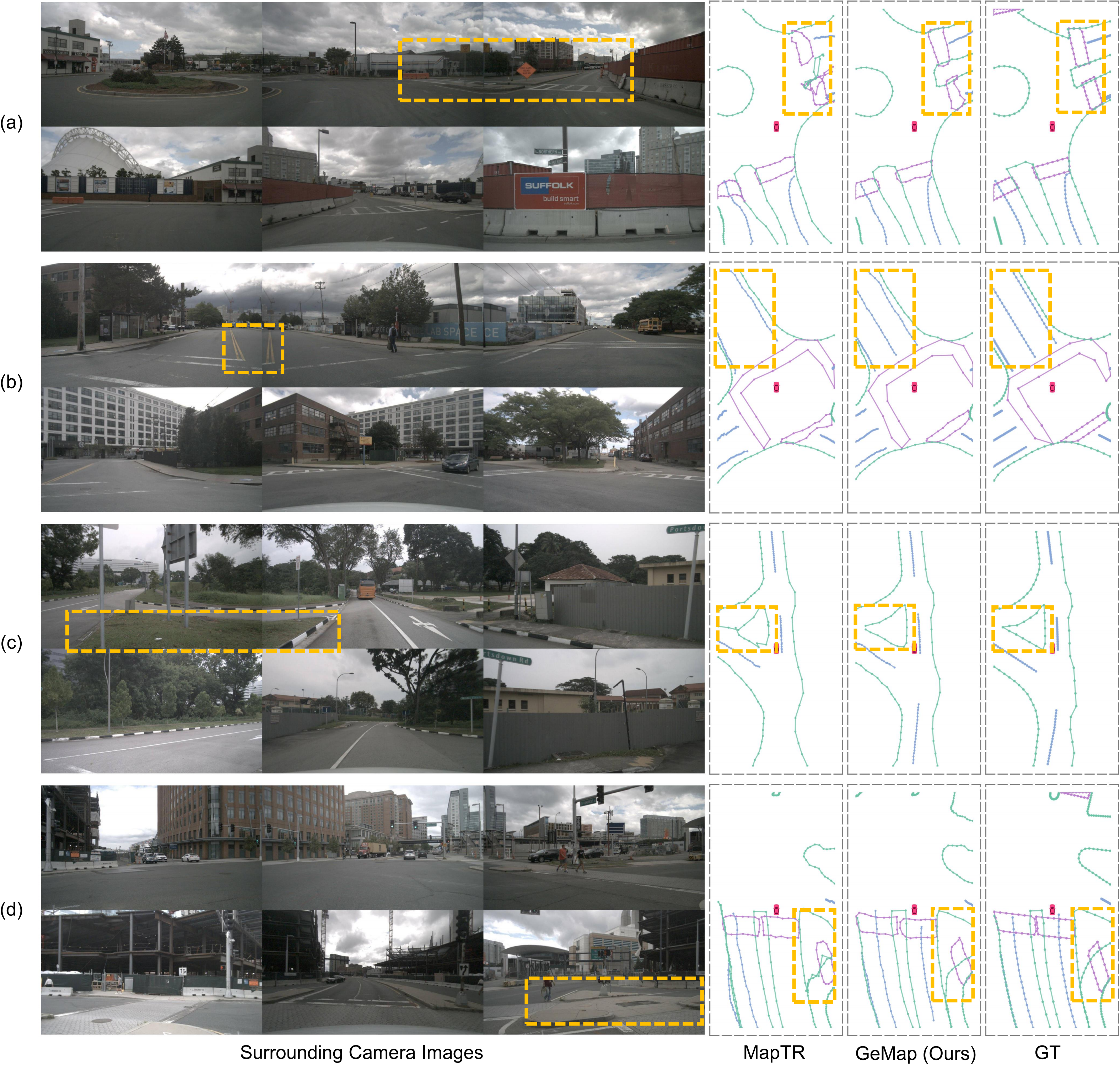

Visualization results under cloudy weather conditions.

@article{zhang2023online,
  title={Online Vectorized HD Map Construction using Geometry},
  author={Zhang, Zhixin and Zhang, Yiyuan and Ding, Xiaohan and Jin, Fusheng and Yue, Xiangyu},
  journal={arXiv preprint arXiv:2312.03341},
  year={2023}
}