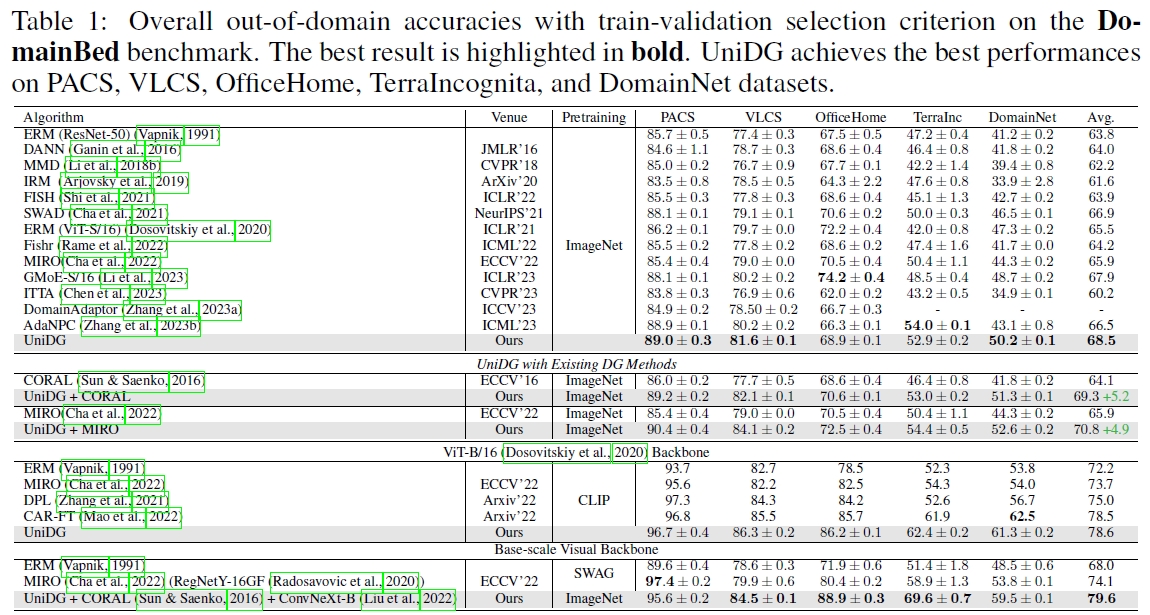

We conduct experiments with the DomainBed benchmark on the PACS, VLCS, OfficeHome, TerraIncognita, and DomainNet datasets.

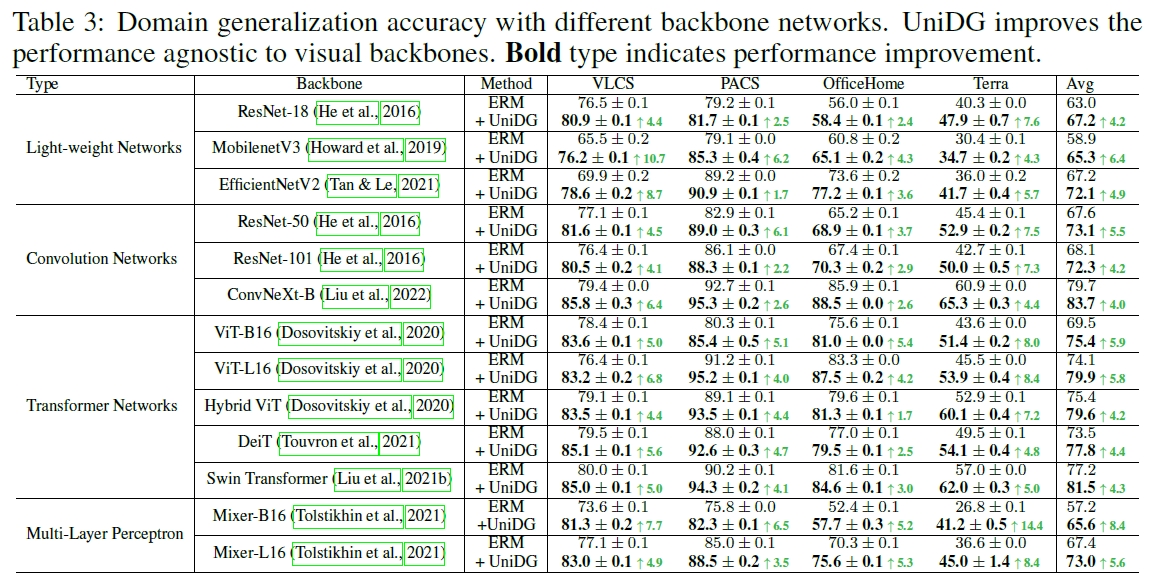

We evaluate UniDG over 3 parallel trials with different random seeds and report the mean and standard error of classification accuracy (%) on 5 datasets.
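The following Python snippet is a minimal sketch of how a mean and standard error over seeded trials can be computed. It assumes the standard error is the sample standard deviation (n − 1 denominator) divided by the square root of the number of trials. The function name `mean_and_standard_error` and the example accuracies are hypothetical and do not come from the authors' code or results, which may use a different convention.

```python
import math
from statistics import mean, stdev


def mean_and_standard_error(accuracies):
    """Return (mean, standard error) of per-seed accuracies, in percent.

    Assumption: standard error = sample standard deviation / sqrt(n),
    where n is the number of independent seeded trials.
    """
    n = len(accuracies)
    if n < 2:
        # A spread cannot be estimated from a single trial.
        raise ValueError("need at least two trials to estimate a standard error")
    return mean(accuracies), stdev(accuracies) / math.sqrt(n)


# Hypothetical accuracies (%) from 3 seeded trials on one dataset.
m, se = mean_and_standard_error([80.0, 81.0, 82.0])
print(f"{m:.1f} +/- {se:.1f}")
```

With three trials, the reported spread shrinks by a factor of about 1.7 (√3) relative to the per-trial standard deviation.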

UniDG achieves strong performance on domain generalization tasks. Table 1 shows the performance of existing advanced approaches for DG tasks using different pre-training methods. Notably, with only ImageNet pre-training, UniDG outperforms the CAR-FT model with CLIP pre-training by 1.1% in average accuracy (79.6% vs. 78.5%). On the TerraIncognita dataset, which exhibits complex domain shift, UniDG reaches an accuracy of 62.4%, outperforming CAR-FT by 0.5%.

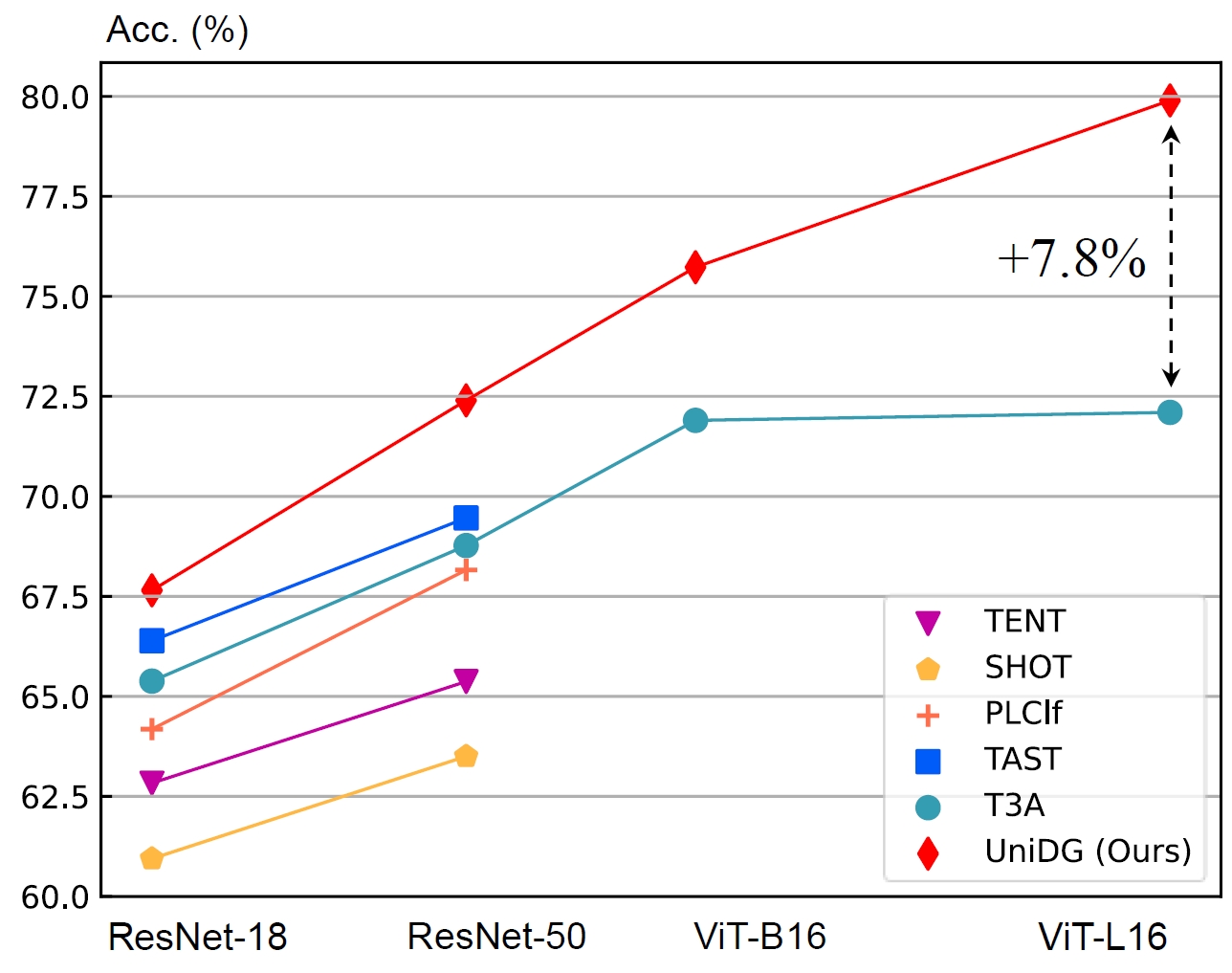

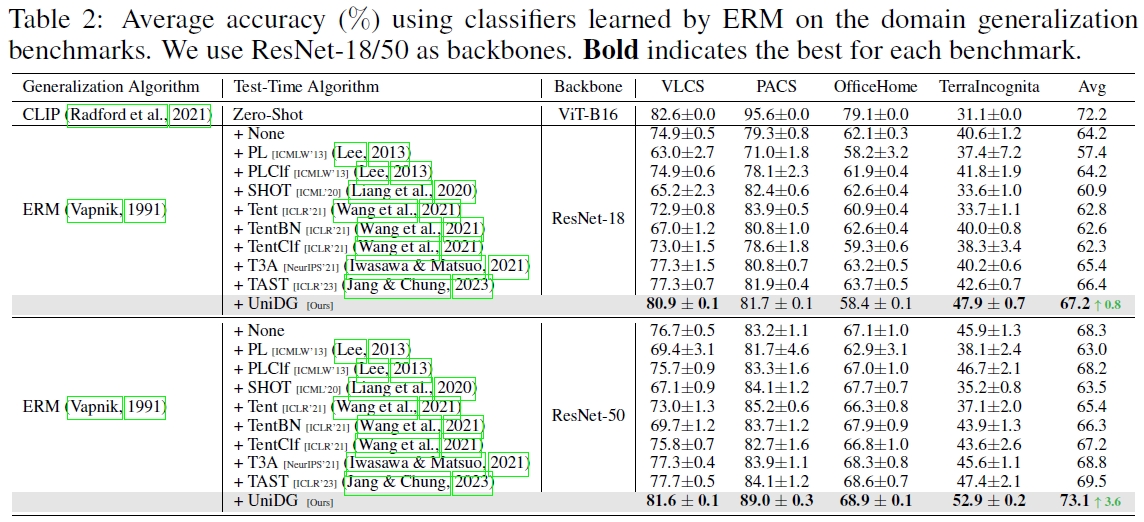

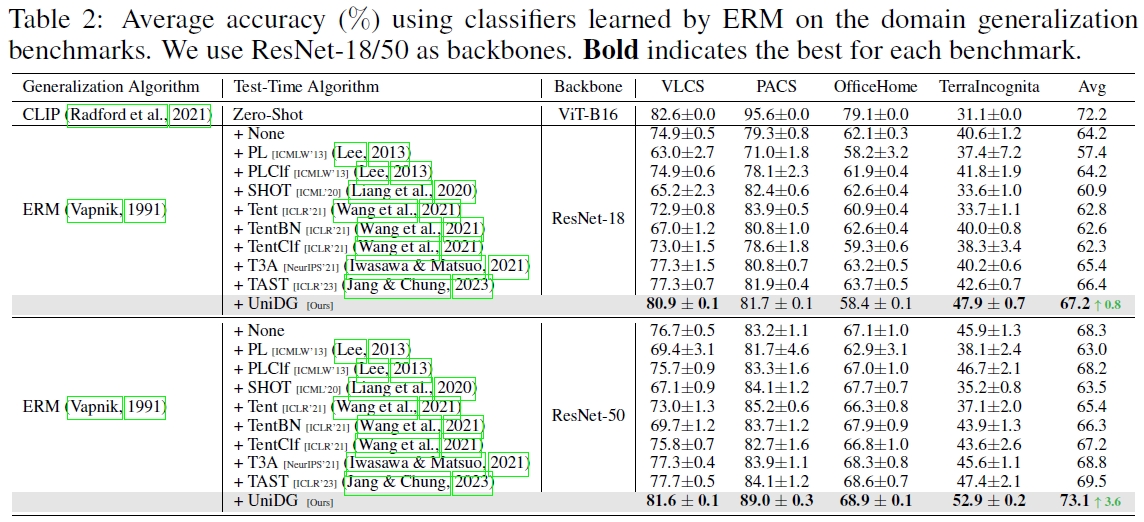

UniDG outperforms all existing test-time methods, including the state-of-the-art method TAST (Jang & Chung, 2023). Specifically, as shown in Table 2, we use ResNet-18 and ResNet-50 as backbones and average accuracy as the metric to evaluate several test-time methods. UniDG achieves an average accuracy of 67.2% with ResNet-18 on the VLCS, PACS, OfficeHome, and TerraIncognita datasets.


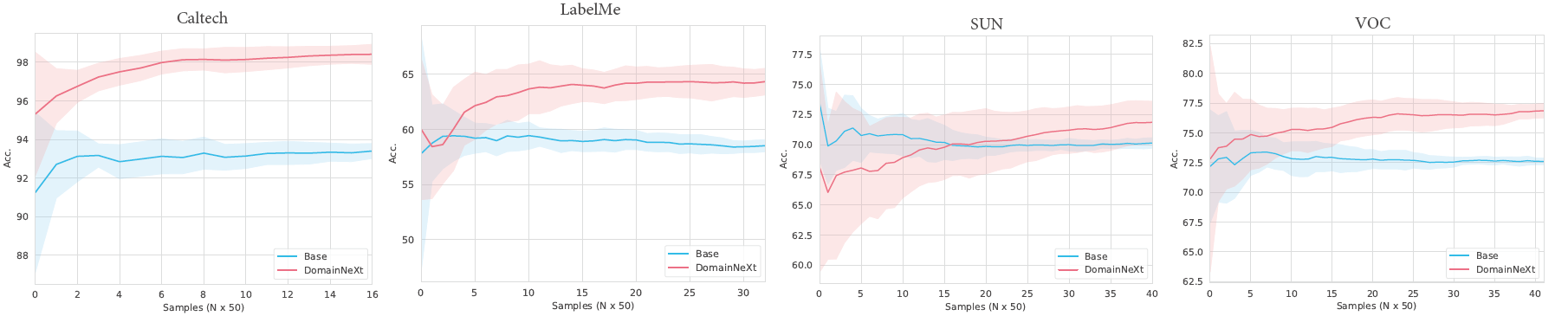

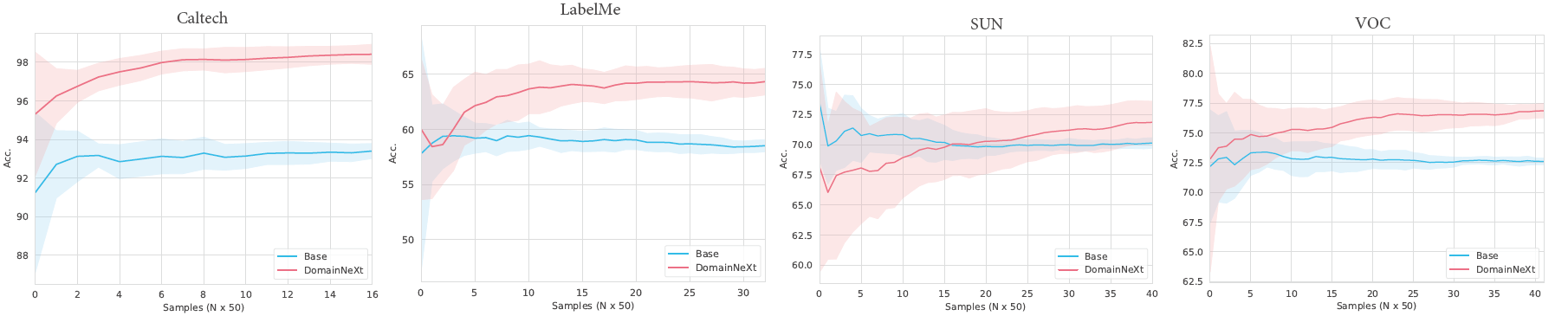

Accuracy accumulation curves on VLCS. UniDG outperforms the base ERM model by about 5% in accuracy. Note that we randomly select 10 different trial seeds for a more reliable comparison.
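To make the notion of an accuracy accumulation curve concrete, the Python sketch below computes running accuracy after each test sample. It assumes that test samples arrive as a stream and that the curve plots cumulative accuracy against the number of samples seen so far. The function name `accuracy_accumulation_curve` and the toy inputs are hypothetical and are not taken from the authors' code.

```python
from itertools import accumulate


def accuracy_accumulation_curve(predictions, labels):
    """Running accuracy (%) after each test sample, in the given order.

    Assumption: entry i is the fraction of the first i + 1 samples
    that were classified correctly.
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    # 1 for a correct prediction, 0 otherwise.
    correct = [int(p == y) for p, y in zip(predictions, labels)]
    # Cumulative count of correct predictions after each sample.
    running_correct = accumulate(correct)
    return [100.0 * c / (i + 1) for i, c in enumerate(running_correct)]


# Toy stream of 4 test samples (hypothetical values).
curve = accuracy_accumulation_curve([1, 0, 1, 1], [1, 1, 1, 0])
print([round(a, 1) for a in curve])
```

In a test-time setting the predictions depend on the order of the stream. Under this assumption, each trial seed would fix one shuffled order, and per-seed curves would then be compared or averaged.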